Figure 1 - CPU diagram [1: Understanding physical and logical CPUs. URL: https://www.linkedin.com/pulse/understanding-physical-logical-cpus-akshay-deshpande]

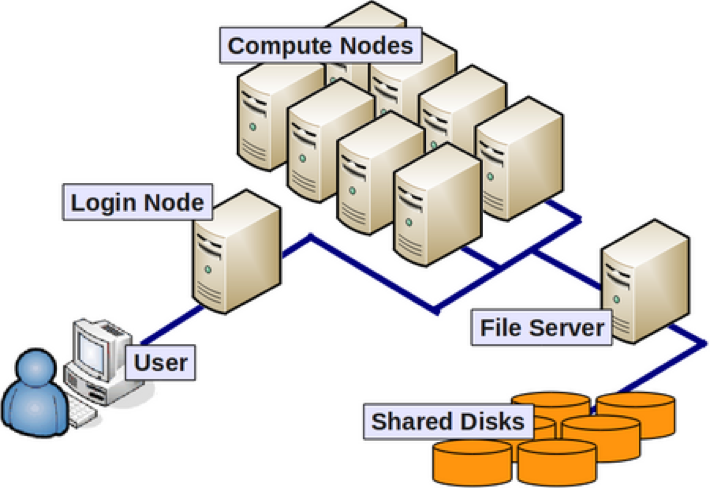

Figure 2 - Accessing a cluster [2: Introduction to high performance computing for Supercomputing Wales: Supercomputing Wales introduction. URL: https://supercomputingwales.github.io/SCW-tutorial/01-HPC-intro/]

Atlas is a Cray CS500 Linux cluster.

Atlas is composed of 244 nodes, each containing two 2.40 GHz Intel Xeon Platinum 8260 (2nd Generation Intel Xeon Scalable) processors with 24 cores each, for a total of 48 cores per node.

| Node type | Number of nodes | RAM per node | GPUs per node |
|---|---|---|---|
| Login | 2 | 384 GB | |
| Data transfer | 2 | 192 GB | |
| Standard | 228 | 384 GB | |
| Big mem | 8 | 1536 GB | |
| GPU | 4 | 384 GB | 2 NVIDIA V100 |

Table 1 - Atlas node types. Other specifications vary by node type.
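The totals implied by Table 1 can be checked with a little shell arithmetic. This is a sketch that assumes, as stated above, that every node has two 24-core processors; the array keys are illustrative labels, not names used by Atlas itself.

```bash
#!/usr/bin/env bash
# Tally Atlas nodes, cores, and GPUs from Table 1.
# Assumption: every node has two 24-core processors (48 cores), as stated above.
declare -A nodes=( [login]=2 [data_transfer]=2 [standard]=228 [big_mem]=8 [gpu]=4 )
cores_per_node=48
gpus_per_gpu_node=2

# Sum the node counts over all node types.
total_nodes=0
for type in "${!nodes[@]}"; do
  total_nodes=$(( total_nodes + ${nodes[$type]} ))
done

total_cores=$(( total_nodes * cores_per_node ))
total_gpus=$(( ${nodes[gpu]} * gpus_per_gpu_node ))

echo "nodes=$total_nodes cores=$total_cores gpus=$total_gpus"
# → nodes=244 cores=11712 gpus=8
```

The node counts add up to the 244 nodes quoted above, which gives 11,712 cores across the cluster.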

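Log in to a login node with:

$ ssh <SCINet UserID>@Atlas-login.hpc.msstate.edu

To reach a data transfer node, use:

$ ssh <SCINet UserID>@Atlas-dtn.hpc.msstate.edu

For Globus transfers, the data transfer nodes are available as the msuhpc2#Atlas-dtn endpoint.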
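When scripting logins or transfers, a small helper can keep the two hostnames straight. The function name atlas_host and its role names login and dtn are hypothetical, chosen for illustration; only the hostnames come from the commands above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the Atlas hostname for a given node role.
atlas_host() {
  case "$1" in
    login) echo "Atlas-login.hpc.msstate.edu" ;;
    dtn)   echo "Atlas-dtn.hpc.msstate.edu" ;;
    *)     echo "unknown role: $1 (expected login or dtn)" >&2; return 1 ;;
  esac
}

# Usage (replace the placeholder with your SCINet UserID):
# ssh "your.userid@$(atlas_host login)"
# ssh "your.userid@$(atlas_host dtn)"
```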

Figure 3 - Globus interface example [3: How to log in and transfer files with Globus. URL: https://docs.globus.org/how-to/get-started/]

Atlas uses Lmod as its environment module system.

Load a module with module load blast, or with the shorthand ml blast.

Some applications are available as containers: self-contained execution environments that package the software together with its dependencies.

These container-based applications are listed by module avail once the user has loaded the singularity module.

Atlas uses the Slurm Workload Manager as its scheduler and resource manager.

Slurm has three primary job allocation commands, salloc, sbatch, and srun, which accept almost identical options.

This example uses salloc to request resources, srun to run one step of an analysis, and sbatch to submit a job array that runs the second step of the analysis several times on different data.

Downloading Data

cd /home/adam.thrash/training/

curl ftp://ftp.ensemblgenomes.org/pub/plants/release-28/fasta/arabidopsis_thaliana/cdna/Arabidopsis_thaliana.TAIR10.28.cdna.all.fa.gz -o athal.fa.gz

for i in $(seq 25 40); do

mkdir -p data/DRR0161${i};

wget -P data/DRR0161${i} ftp://ftp.sra.ebi.ac.uk/vol1/fastq/DRR016/DRR0161${i}/DRR0161${i}_1.fastq.gz;

wget -P data/DRR0161${i} ftp://ftp.sra.ebi.ac.uk/vol1/fastq/DRR016/DRR0161${i}/DRR0161${i}_2.fastq.gz;

done
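The URLs in the loop above follow a fixed pattern, so they can be rebuilt from the accession alone, and the download can be checked before moving on. This is a minimal sketch: ena_fastq_url and check_downloads are hypothetical helper names, and the ${acc:0:6} directory rule only reproduces the paths used above for these nine-character DRR accessions; it is not offered as a general rule for every ENA accession.

```bash
#!/usr/bin/env bash
# Rebuild a read-file URL in the layout used by the loop above:
# vol1/fastq/<first 6 chars>/<accession>/<accession>_<read>.fastq.gz
ena_fastq_url() {
  local acc=$1 r=$2
  echo "ftp://ftp.sra.ebi.ac.uk/vol1/fastq/${acc:0:6}/${acc}/${acc}_${r}.fastq.gz"
}

# Report any missing or empty read files under a data directory.
# Returns non-zero if at least one of the 32 expected files is missing.
check_downloads() {
  local datadir=$1 missing=0 i acc r f
  for i in $(seq 25 40); do
    acc="DRR0161${i}"
    for r in 1 2; do
      f="${datadir}/${acc}/${acc}_${r}.fastq.gz"
      if [[ ! -s "$f" ]]; then
        echo "missing: $f"
        missing=1
      fi
    done
  done
  return "$missing"
}

# Usage, from /home/adam.thrash/training/:
# check_downloads data && echo "all read files present"
```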

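The transcriptome is saved as /home/adam.thrash/training/athal.fa.gz, and the paired read files for each sample are saved under data/DRR0161 followed by the sample number (for example, data/DRR016125).

Requesting Resources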

salloc -A scinet

Output

salloc: Granted job allocation 292472

salloc: Waiting for resource configuration

salloc: Nodes Atlas-0042 are ready for job

The -A scinet option requests the allocation under the scinet account.

Indexing Script - /home/adam.thrash/training/index.sh

#!/usr/bin/env bash

# CHANGE TO THE CORRECT DIRECTORY

cd /home/adam.thrash/training/

# PRINT THE HOSTNAME FOR THE EXAMPLE

hostname

# LOAD SINGULARITY AND SALMON

ml singularity salmon/1.3.0--hf69c8f4_0

# INDEX THE TRANSCRIPTOME

salmon index -t athal.fa.gz -i athal_index

The ml line loads the singularity module and the salmon module. Because the salmon module is provided as a container, singularity has to be loaded first. The last line runs the salmon indexing command.

Running the Script
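srun starts the path it is given as a program, so index.sh needs execute permission before the srun command that follows will work (alternatively, the script can be handed to an interpreter, as in srun bash /home/adam.thrash/training/index.sh). A minimal sketch of that check; ensure_executable is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Make sure a script exists and is executable by its owner before running it with srun.
ensure_executable() {
  local script=$1
  if [[ ! -f "$script" ]]; then
    echo "no such file: $script" >&2
    return 1
  fi
  if [[ ! -x "$script" ]]; then
    chmod u+x "$script"   # add execute permission for the owner only
  fi
}

# Usage:
# ensure_executable /home/adam.thrash/training/index.sh
# srun /home/adam.thrash/training/index.sh
```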

srun /home/adam.thrash/training/index.sh

Output

Atlas-0042.HPC.MsState.Edu

WARNING: Skipping mount /apps/singularity-3/singularity-3.7.1/var/singularity/mnt/session/etc/resolv.conf [files]: /etc/resolv.conf doesn't exist in container

index ["athal_index"] did not previously exist . . . creating it

...

salmon Quantification Script - salmon-run.sh

#!/usr/bin/env bash

#SBATCH --account scinet # set correct account

#SBATCH --nodes=1 # request one node

#SBATCH --cpus-per-task=8 # ask for 8 CPUs

#SBATCH --time=0-00:30:00 # set job time to 30 minutes.

#SBATCH --array=0-15 # run 16 jobs of this script

#SBATCH --output=%x.%A_%a.log # store output as jobname.jobid_arrayid.log

#SBATCH --error=%x.%A_%a.err # store errors as jobname.jobid_arrayid.err

#SBATCH --job-name="salmon_run" # job name that will be shown in the queue

declare -A rna_files

rna_files['DRR016125']='data/DRR016125/DRR016125_1.fastq.gz data/DRR016125/DRR016125_2.fastq.gz'

rna_files['DRR016126']='data/DRR016126/DRR016126_1.fastq.gz data/DRR016126/DRR016126_2.fastq.gz'

rna_files['DRR016127']='data/DRR016127/DRR016127_1.fastq.gz data/DRR016127/DRR016127_2.fastq.gz'

rna_files['DRR016128']='data/DRR016128/DRR016128_1.fastq.gz data/DRR016128/DRR016128_2.fastq.gz'

rna_files['DRR016129']='data/DRR016129/DRR016129_1.fastq.gz data/DRR016129/DRR016129_2.fastq.gz'

rna_files['DRR016130']='data/DRR016130/DRR016130_1.fastq.gz data/DRR016130/DRR016130_2.fastq.gz'

rna_files['DRR016131']='data/DRR016131/DRR016131_1.fastq.gz data/DRR016131/DRR016131_2.fastq.gz'

rna_files['DRR016132']='data/DRR016132/DRR016132_1.fastq.gz data/DRR016132/DRR016132_2.fastq.gz'

rna_files['DRR016133']='data/DRR016133/DRR016133_1.fastq.gz data/DRR016133/DRR016133_2.fastq.gz'

rna_files['DRR016134']='data/DRR016134/DRR016134_1.fastq.gz data/DRR016134/DRR016134_2.fastq.gz'

rna_files['DRR016135']='data/DRR016135/DRR016135_1.fastq.gz data/DRR016135/DRR016135_2.fastq.gz'

rna_files['DRR016136']='data/DRR016136/DRR016136_1.fastq.gz data/DRR016136/DRR016136_2.fastq.gz'

rna_files['DRR016137']='data/DRR016137/DRR016137_1.fastq.gz data/DRR016137/DRR016137_2.fastq.gz'

rna_files['DRR016138']='data/DRR016138/DRR016138_1.fastq.gz data/DRR016138/DRR016138_2.fastq.gz'

rna_files['DRR016139']='data/DRR016139/DRR016139_1.fastq.gz data/DRR016139/DRR016139_2.fastq.gz'

rna_files['DRR016140']='data/DRR016140/DRR016140_1.fastq.gz data/DRR016140/DRR016140_2.fastq.gz'

declare -a samples=( 'DRR016125' 'DRR016126' 'DRR016127' 'DRR016128' 'DRR016129' 'DRR016130' 'DRR016131' 'DRR016132' 'DRR016133' 'DRR016134' 'DRR016135' 'DRR016136' 'DRR016137' 'DRR016138' 'DRR016139' 'DRR016140' )

cd /home/adam.thrash/training/

ml singularity salmon/1.3.0--hf69c8f4_0

SAMPLE=${samples[$SLURM_ARRAY_TASK_ID]}

read -r -a FILES <<< "${rna_files[$SAMPLE]}"

salmon quant -i athal_index -l A -1 "${FILES[0]}" -2 "${FILES[1]}" -p "$SLURM_CPUS_PER_TASK" --validateMappings -o "quants/$SAMPLE"
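Because the accessions in this example are consecutive, the sample for each array task could also be computed from SLURM_ARRAY_TASK_ID instead of being listed by hand. This sketch assumes that numbering (task 0 maps to DRR016125, task 15 to DRR016140) and the data/ layout created by the download loop; sample_for_task and reads_for_sample are hypothetical helpers, not part of Slurm or salmon.

```bash
#!/usr/bin/env bash
# Map an array task index (0-15) to its accession, assuming consecutive numbering.
sample_for_task() {
  local task_id=$1
  printf 'DRR0161%02d\n' $(( 25 + task_id ))
}

# Print the two read files for a sample, space-separated, in the data/ layout above.
reads_for_sample() {
  local sample=$1
  echo "data/${sample}/${sample}_1.fastq.gz data/${sample}/${sample}_2.fastq.gz"
}

# Inside the job script, Slurm sets SLURM_ARRAY_TASK_ID for each task:
# SAMPLE=$(sample_for_task "$SLURM_ARRAY_TASK_ID")
# read -r -a FILES <<< "$(reads_for_sample "$SAMPLE")"
```

The mapping can be previewed off the cluster with for t in $(seq 0 15); do sample_for_task "$t"; done. The hand-written arrays in salmon-run.sh remain the safer choice when sample names are not consecutive.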

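The rna_files associative array maps each sample to its two read files, and the samples array lists the samples in order, so each array task selects one sample with SLURM_ARRAY_TASK_ID and passes its files to salmon quant.

Submitting the Job Array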

sbatch salmon-run.sh

Output

Submitted batch job 292484
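Later commands such as squeue need the job ID that sbatch prints. A minimal sketch that extracts it from the Submitted batch job line; job_id_from_sbatch is a hypothetical helper. Slurm's own sbatch --parsable option prints just the ID (followed by ;cluster on multi-cluster systems) and avoids this parsing altogether.

```bash
#!/usr/bin/env bash
# Extract the numeric job ID from sbatch's "Submitted batch job N" line.
job_id_from_sbatch() {
  local line=$1
  local id=${line##* }            # keep the text after the last space
  [[ $id =~ ^[0-9]+$ ]] || return 1
  echo "$id"
}

# Usage on the cluster:
# JOBID=$(job_id_from_sbatch "$(sbatch salmon-run.sh)")
# squeue --jobs "$JOBID"
```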

Checking the Status of Running Jobs

squeue --me

Output

JOBID PARTITION NAME USER ST TIME NODES NODELIST(REASON)

292484_0 atlas salmon_r adam.thr R 0:03 1 Atlas-0047

292484_1 atlas salmon_r adam.thr R 0:03 1 Atlas-0051

292484_2 atlas salmon_r adam.thr R 0:03 1 Atlas-0060

292484_3 atlas salmon_r adam.thr R 0:03 1 Atlas-0067

292484_4 atlas salmon_r adam.thr R 0:03 1 Atlas-0074

292484_5 atlas salmon_r adam.thr R 0:03 1 Atlas-0076

292484_6 atlas salmon_r adam.thr R 0:03 1 Atlas-0079

292484_7 atlas salmon_r adam.thr R 0:03 1 Atlas-0083

292484_8 atlas salmon_r adam.thr R 0:03 1 Atlas-0131

292484_9 atlas salmon_r adam.thr R 0:03 1 Atlas-0136

292484_10 atlas salmon_r adam.thr R 0:03 1 Atlas-0144

292484_11 atlas salmon_r adam.thr R 0:03 1 Atlas-0146

292484_12 atlas salmon_r adam.thr R 0:03 1 Atlas-0149

292484_13 atlas salmon_r adam.thr R 0:03 1 Atlas-0152

292484_14 atlas salmon_r adam.thr R 0:03 1 Atlas-0156

292484_15 atlas salmon_r adam.thr R 0:03 1 Atlas-0158
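With 16 array tasks, a per-state summary is easier to read than the full listing. This sketch counts rows by the ST column of output laid out like the example above; count_states is a hypothetical helper, and it assumes the default column order with no spaces in job names.

```bash
#!/usr/bin/env bash
# Count jobs per state (ST, the 5th column) in squeue-style output on stdin.
# The first line is treated as the header and skipped.
count_states() {
  awk 'NR > 1 { n[$5]++ } END { for (s in n) print s, n[s] }' | sort
}

# Usage on the cluster:
# squeue --me | count_states
```

For scripted use, squeue --me -h -o %t prints only the compact state of each job without a header, which can be piped to sort | uniq -c instead.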